Sonification of neural data: my experience and outlook

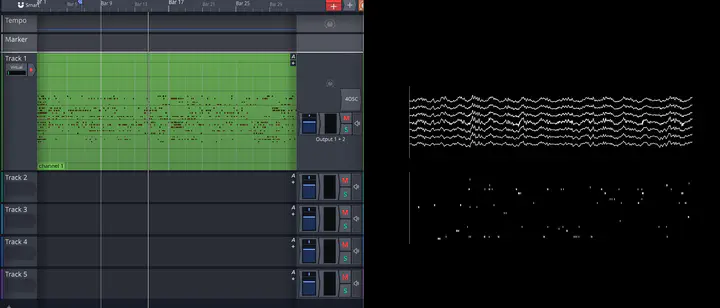

Left: spike-based MIDI in a digital audio workstation (DAW) interface. Right: visualization of LFP and spiking data.

As explained here, in 2022 I had the opportunity to participate in a project bringing together neuroscience and fine arts to engage the public. In this post I want to share my process and what I learned, since finding the right tools wasn’t easy in such a niche area. I also want to share some ideas for future developments in sonifying neural data.

Sonification process

Armed with hippocampal LFP and spikes from the Annabelle Singer lab at Georgia Tech, I set out to sonify spikes from sorted place cells in the hope of hearing replay events as sweeps up or down a scale or arpeggio.

I tried multiple Python packages for dealing with MIDI, including mido, miditime, and midiutil, and settled on music21 as the ideal tool for writing MIDI files.

The others were harder to use and/or rounded note times to the nearest 1/16th note (or something like that), which was not ideal for representing the irregularity of spikes.

music21 was able to write MIDI files as well as play them right from a Jupyter notebook.

I tried mapping place cells (around a circular track) to major, whole-tone, and pentatonic scales, as well as an ascending tonic-descending dominant arpeggio.
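To make the mapping concrete, here is a minimal sketch of how spikes from sorted place cells could be turned into note events. It is not my original code: the function names, the root note, the tempo, and the fixed sixteenth-note duration are all assumptions chosen for illustration. The `write_midi` helper assumes music21 is installed and imports it lazily, so the mapping functions work without it.

```python
# Scale patterns as semitone offsets within one octave.
SCALES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "whole_tone": [0, 2, 4, 6, 8, 10],
    "pentatonic": [0, 2, 4, 7, 9],
}


def cell_to_pitch(cell_rank, scale="pentatonic", root=48):
    """Map a place cell's rank along the track to a MIDI pitch.

    cell_rank is the cell's position after sorting by place field
    (0 = first field on the track). root=48 (C3) is an arbitrary choice.
    """
    steps = SCALES[scale]
    octave, degree = divmod(cell_rank, len(steps))
    return root + 12 * octave + steps[degree]


def spikes_to_events(spike_times, cell_ranks, bpm=120, scale="pentatonic"):
    """Convert spikes to (offset in quarter notes, MIDI pitch) pairs.

    spike_times are in seconds; offsets stay as floats so the
    irregular timing of the spikes is not snapped to a grid here.
    """
    beats_per_second = bpm / 60.0
    return [
        (t * beats_per_second, cell_to_pitch(c, scale))
        for t, c in zip(spike_times, cell_ranks)
    ]


def write_midi(events, path, bpm=120):
    """Write events to a MIDI file with music21 (assumed installed)."""
    from music21 import note, stream, tempo  # lazy import: optional dependency

    s = stream.Stream()
    s.insert(0, tempo.MetronomeMark(number=bpm))
    for offset, pitch in events:
        n = note.Note()
        n.pitch.midi = pitch
        n.quarterLength = 0.25  # assumed short, fixed note length per spike
        s.insert(offset, n)
    s.write("midi", fp=path)
```

With this layout, a replay event that sweeps through neighboring place fields would come out as a run up or down the chosen scale.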

For these MIDI files to be interesting, they needed to be played. Thus I was introduced to the world of digital audio workstations (DAWs). My collaborator, Timothy Min, used the Ableton Live DAW, combined with Max for real-time processing of data. Looking for free, open-source alternatives, I landed on Tracktion’s Waveform Free, which worked pretty well. All I needed to do was import the MIDI file and choose an instrument.

I didn’t find a good way to incorporate data in Waveform other than through MIDI files. It had “automation curves,” which I wanted to use so that theta power, for example, could control some parameter like volume or reverb. However, key points could only be set by hand. A workaround I discovered was to open the project file in a text editor and insert programmatically generated XML at the appropriate location. The “right” way to work with data is probably Max, though, or Pure Data, the free, open-source alternative I found. Pure Data is not super intuitive, so I didn’t get very far with it, but it looks like it could be integrated straight into a DAW via a plugin.
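As a rough sketch of the XML workaround, the snippet below thins a sampled signal (theta power, say) down to a manageable number of key points and serializes them. The tag and attribute names (`CURVE`, `POINT`, `time`, `value`) are placeholders I made up for illustration, not Waveform's actual project schema; you would need to copy the real element names from a hand-made automation curve in your own project file.

```python
import xml.etree.ElementTree as ET


def to_keypoints(times, values, max_points=200):
    """Thin a sampled signal to at most max_points (time, value) pairs.

    Values are rescaled to [0, 1], on the assumption that the target
    parameter (volume, reverb, ...) expects a normalized range.
    """
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero for a flat signal
    step = max(1, -(-len(values) // max_points))  # ceiling division
    return [
        (times[i], (values[i] - lo) / span)
        for i in range(0, len(values), step)
    ]


def keypoints_to_xml(points, curve_tag="CURVE", point_tag="POINT"):
    """Serialize key points as XML.

    The tag and attribute names are hypothetical placeholders,
    NOT the real Waveform schema.
    """
    curve = ET.Element(curve_tag)
    for t, v in points:
        ET.SubElement(curve, point_tag, {"time": f"{t:.3f}", "value": f"{v:.4f}"})
    return ET.tostring(curve, encoding="unicode")
```

The resulting string would then be pasted (or inserted by script) where the hand-drawn curve lives in the project file, after backing the file up.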

Outlook on neural data sonification

From a few brief web searches and my own experiences, I am aware of only a few other publicly available examples of sonification of neural data.

- This is the best one! Doing almost exactly what I sought to do with hippocampus data, but much better, Quorumetrix sonifies LFP and maps place cell-sorted spikes onto different notes. They also include anatomical and analytical 3D visualizations of the brain, neurons, and spikes, and color-code each cell type. Quorumetrix also has other videos sonifying and visualizing neural data. This one sonifies spikes of neurons in visual cortex as well as synaptic inputs.

- Using EEG as an instrument, dating back to Alvin Lucier’s Music for Solo Performer in 1965. More recent work in this vein includes that of Grace Leslie at Georgia Tech.

- Neuralink’s snout boop demo appears to map the number of spikes in each time bin to a note on a pentatonic scale. (fun tangential Twitter thread)

- Panagiota Papchristodoulou’s master’s thesis sonifies variables of the connectome in real time as the user explores different regions of the brain.

I’ve had some ideas for music based on or inspired by neuroscience data and models that, as far as I know, haven’t been pursued:

- Using a neuron as an instrument

    - Velocity would map to input current, yielding a train of spikes that are more or less frequent, rather than changing the volume of individual spikes.

    - Making the neuron’s parameters configurable would allow different firing patterns, such as bursting and adaptation.

    - Creating a DAW plugin that does this, allowing you to play a neuron as a MIDI instrument, would be awesome.

    - A simpler option would be to simulate a Poisson or Hawkes process with a velocity-determined rate.

- Simulate music by mapping chords to different assemblies of neurons.

    - It would be interesting to move from one chord to another by simulating movement through a dynamical system with an attractor state at each chord.

- Activity from different regions could be played with different instruments.

- Data from right and left hemispheres could map to right and left channels.
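
To give a feel for the neuron-as-instrument idea, here is a minimal sketch of a leaky integrate-and-fire neuron whose input current is set by MIDI velocity. Everything about it is an assumption for illustration: the dimensionless voltage, the time constant, the threshold, and the linear velocity-to-current mapping. A real plugin would need to run in real time and would probably use a richer neuron model to get bursting or adaptation.

```python
def lif_spike_times(velocity, duration=1.0, dt=0.001,
                    tau=0.02, threshold=1.0, max_drive=3.0):
    """Simulate a leaky integrate-and-fire neuron for one held note.

    velocity:  MIDI velocity (0-127), mapped linearly to input current.
    duration:  how long the note is held, in seconds.
    tau:       membrane time constant in seconds (assumed value).
    threshold: spike threshold in dimensionless voltage units.
    max_drive: input current at velocity 127 (assumed value).

    Returns a list of spike times in seconds.
    """
    drive = max_drive * velocity / 127.0
    v = 0.0
    spikes = []
    for step in range(int(round(duration / dt))):
        # Forward-Euler update of dv/dt = (drive - v) / tau
        v += dt / tau * (drive - v)
        if v >= threshold:
            spikes.append((step + 1) * dt)
            v = 0.0  # reset after each spike
    return spikes
```

For example, `len(lif_spike_times(127))` is larger than `len(lif_spike_times(64))`, so pressing a key harder gives a faster spike train instead of louder spikes. One side effect of this mapping is that soft notes whose current stays below threshold produce no spikes at all, which may or may not be what you want musically.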